Demonstrations-videos-museum

Content:

Introduction (2021)

Videos

1 – Robots with AI – Cognitics in Practice (2007-2013)

2 – Robotics and Natural Emotions (2017)

3 – Industrial Robotics and Classical AI (2018)

4 – Robotics and Cooperation with Humans (2015)

5 – Robotics with Vision for Industrial Application, including Bin Picking (1981)

6 – Robotics with Vision for Industrial Application, including Handling of Overlapping Parts (1979)

Introduction (2021)

This section provides access to a small number of videos and thus aims to reinforce the credibility of the theory presented on this site.

The approach has two components.

The first one presents demonstrations, concretely implemented in the real world, with the perspective of validating the proposed concepts.

The second one situates the achievements in time: they are spread over more than 40 years, going back to the 1970s.

Automated cognition, or cognitics, is deployed here in a robotic environment; the videos allow us to observe certain representative effects in machines, without requiring the associated hardware and software to still be available.

The highly innovative character of these achievements at the time of their first release, and their relevance in the scientific and technical field, should thus also reinforce the credit given to the other, more general elements of the site, which extend towards the concepts of value and the collective, and towards the human and life.

Concerning the possible repercussions of our theory of cognition for the much broader cases of the human and of life in general, readers can refer to their own experience and observations.

Videos

The demonstration videos are listed below in an order that combines importance and recency (the last videos presented are thus rather old, which adds a certain historical and museum value to their scientific relevance).

Each entry provides a title, a brief description, a link to the video, and finally at least one main reference in the scientific literature.

This section is under construction, and it is expected that the number of videos treated will increase progressively.

1 – Robots with AI – Cognitics in Practice (2007-2013)

Extracts from “Robocup at Home” Competitions and Other Experiments – Video(s)

The video presents several of our robots with a high degree of cognitive capabilities and real-world effectiveness, mostly in cooperation with humans.

Our group of robots includes prototypes entirely designed at our university (Piaget environment for programming, development and operational supervision, RH-Y and OP-Y robots) as well as the humanoid NAO, reconfigured and integrated into our Piaget environment, as a mediator between humans and other machines (Nono-Y).

This video is a “clip” of about four minutes, gathering extracts of longer videos, each one dealing with tasks particularly representative of what is expected from a domestic assistance robot, notably as practiced in the international competition “Robocup at Home” [1.1]. The skills demonstrated are numerous, and include the following elements:

- Verbal communication (comprehension and expression)

- Gestural control (understanding and expression)

- Compliant and adaptive controls (force and presence guidance)

- Mobility and localization

- Object grasping

- Object transport

- Teaching and learning by example (“programming”)

- Perception, pattern recognition and decision-making capabilities

- Capabilities for autonomous action

- Semi-autonomous operation capabilities (cooperative or adaptive modes)

- Guided learning capabilities (“client/server” mode)

- Autonomous navigation/movement without collision

- Cultural sharing (association of names with places and objects)

- Ability to estimate values and make strategic and tactical changes (“emotions”)

The video is entitled “Group of Cooperative Robots for Home Assistance (RG-Y, RH-Y, OP-Y, and Nono-Y), with Cognitive Capabilities Ensured by our Piaget Environment”. Eight sequences are visible; the first six of them were captured during official world level “Robocup-at-Home” competitions, in 5 different countries [1.2]; the last two sequences were mainly taken in our research laboratory, at the HESSO.HEIG-VD, and demonstrate additional interesting functionalities:

- “Copycat”, Robocup@Home Test-Task (Atlanta, USA). This sequence demonstrates the possibility of teaching a robot new movements and tasks simply by showing them, that is, by having a human perform these tasks in a natural way in front of the machine. The robot then reproduces by itself what it has observed in the scene.

- “Fast Follow”, Robocup@Home Test-Task (Suzhou, China). This sequence demonstrates the possibility of guiding a robot at home, just by walking naturally in front of it or by piloting it without contact, by hand gestures. The goal of this task is a fast, “flowing” movement, and the guide-robot “tandem” must pass another one without being too disturbed (in particular, without unfortunate robot swapping); a bonus is given to the fastest tandem. Here, to ensure safety, the guide turns around and watches the movements of his robot.

- “Walk and Talk”, Robocup@Home Test-Task (Graz, Austria). The sequence demonstrates the possibility of teaching new topologies and paths at home by guiding the robot through them, and vocally associating specific names with key locations.

- “Open Challenge”, Robocup@Home Test-Task: Gesture control and Crab Gait (Graz, Austria). The sequence demonstrates the possibility of controlling a robot at a distance, by gestures, as well as the possibility of lateral displacement for a mobile robot. Ironically, it is here another robot (RH-Y) that performs the control gestures, at a distance of about 1 meter. Our OP-Y robot perceives the gestures by Lidar and, for its own lateral movements, it exploits our omnidirectional platform with 4 wheels and independent suspensions, as well as the particular geometric structure of Mecanum wheels.

- Concept for Robust Locomotion on Uneven Grounds and Stairs (Simulation and interactive Control using Webots). The sequence demonstrates, in a graphically and physically simulated world that can be controlled in real time, a robust locomotion concept on uneven floors and stairs. The aim was to extend the robust locomotion capabilities of our OP-Y robot beyond flat floors, using innovative kinematics and Webots software.

- “Open Challenge”, Robocup@Home Test-Task: Domestic Assistance in Cooperative Human and Multi Robot Group (Singapore). The clip reports on an experiment where a group of robots brings a beer and snacks to Daniel, demonstrating natural Human-Robot communication, multirobot cooperation and mediation between human and machines, with NAO humanoid, all integrated in our Piaget environment for system development, programming, and intelligent control in the real-world.

- Compliant Motion Control for Cooperating, Mobile Domestic Robot (Yverdon-les-Bains, Switzerland). The sequence demonstrates the smooth movement of a mobile and cooperative home robot. In addition to our programmed and non-contact guidance modes, our RH-Y robot can be driven in compliant mode for precise and natural positioning tasks.

- Multimodal Control (Geneva and Yverdon-les-Bains, Switzerland). Control of our RH-Y and OP-Y robots and of the NAO humanoid can be switched in real time between three different modes (autonomous, cooperating, or server), featuring various instantaneous levels of cognitive performance. In our TeleGrab demo, the RH-Y robot is remotely supervised by a human, possibly with reduced mobility, with multiple possibilities based on our Piaget environment, to effectively grasp an object and carry it to a freely chosen location.

- Final note. Our systems integrate numerous contributions from science, technology and commercial systems, which cannot be all quoted here but are nevertheless gratefully acknowledged.
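The crab gait mentioned in the “Open Challenge” sequence (Graz) relies on the geometry of Mecanum wheels. The following minimal sketch illustrates the textbook inverse kinematics of a generic four-wheel Mecanum platform; it is not the control code of OP-Y. The class name, the dimensions, and the sign convention (which diagonal pair of wheels reverses for a leftward move depends on how the rollers are mounted) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MecanumPlatform:
    """Generic four-wheel Mecanum base (hypothetical dimensions, in meters)."""
    wheel_radius: float
    half_length: float  # half of the distance between front and rear axles
    half_width: float   # half of the distance between left and right wheels

    def wheel_speeds(self, vx: float, vy: float, wz: float):
        """Return wheel angular speeds (rad/s) in the order
        (front-left, front-right, rear-left, rear-right).

        vx: forward speed (m/s), vy: leftward speed (m/s),
        wz: counter-clockwise rotation speed (rad/s).
        """
        k = self.half_length + self.half_width
        r = self.wheel_radius
        return (
            (vx - vy - k * wz) / r,
            (vx + vy + k * wz) / r,
            (vx + vy - k * wz) / r,
            (vx - vy + k * wz) / r,
        )


# Assumed example values, not measurements of any real robot.
platform = MecanumPlatform(wheel_radius=0.05, half_length=0.20, half_width=0.15)

# Pure lateral ("crab") motion to the left at 0.1 m/s: with this convention,
# the front-left and rear-right wheels turn backwards, the other two forwards.
crab = platform.wheel_speeds(vx=0.0, vy=0.1, wz=0.0)
print(crab)
```

With this convention, a pure forward command drives all four wheels at the same speed, while a pure lateral command drives the two diagonals in opposite directions, which is what produces the sideways displacement without any steering.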

Let us note that quantitative criteria are necessary to judge the presence or absence of a competency, and even more so to characterize its intensity. When these criteria are of a physical nature, and thus relate mainly to the real, their use is fairly commonplace. In the domain of the imaginary and the cognitive, however, the situation is more challenging. For this purpose, two types of indicators have their merits: the first gathers the ad hoc indicators defined by the rules of the Robocup competition [1.2]; the second corresponds to the metric proposed in our MCS theory of cognition [1.3], which offers a more general alternative. Let us recall in this respect that there are points of passage that allow us, starting from the real world, to enter a corresponding imaginary and cognitive world; there we find evidence of the fundamental concepts, in particular permanence and change, probability, goal, modeling, and interaction; this leads rather directly to the notions of time, information, values, definitions, and the collective, which are very important in the cognitive domain.

Fig. 1 Robots with AI – Cognitics in Practice. Extracts of longer videos, each one dealing with tasks particularly representative of what is expected from a domestic assistance robot, notably as practiced in the international competition “Robocup at Home”

References for video 1

[1.1] Jean-Daniel Dessimoz and Pierre-François Gauthey, « Group of Cooperating Robots and a Humanoid for Assistance at Home, with cognitive and real-world capabilities ensured by our Piaget environment (2007-2013, with RG-Y, RH-Y, OP-Y and Nono-Y robots, at HESSO.HEIG-VD) », video competition, IJCAI-13, Twenty-third International Joint Conference on Artificial Intelligence, Tsinghua University, Beijing, China, August 3-9, 2013. The 4 min. clip made in 2013 shows an overview of our developments for cooperating robots, with cognitic capabilities and sequences taken while participating in world-level competitions. Click to play the video (v013.05.24) on YouTube

[1.2] Daniele Nardi, Jean-Daniel Dessimoz, et al. (16 authors), « RoboCup@Home; Rules & Regulations », Robocup-at-Home League, Rulebook (Revision: 164M)/ Final version for RoboCup 2011, Istanbul, Turkey, 60pp., 29 June 2011; click here for the paper (.pdf, ca 2.6 Mb).

[1.3] Jean-Daniel Dessimoz, « Cognition and Cognitics – Definitions and Metrics for Cognitive Sciences, in Humans, and for Thinking Machines, 2nd edition, augmented, with considerations of life, through the prism “real – imaginary – values – collective”, and some bubbles of wisdom for our time », Roboptics Editions llc, Cheseaux-Noreaz, Switzerland, 345 pp, March 2020. Electronic version: ISBN 978-2-9700629-4-3, Printed version: ISBN 978-2-9700629-3-6 . https://www.roboptics.ch/editions-english/ .

2 – Robotics and Natural Emotions (2017)

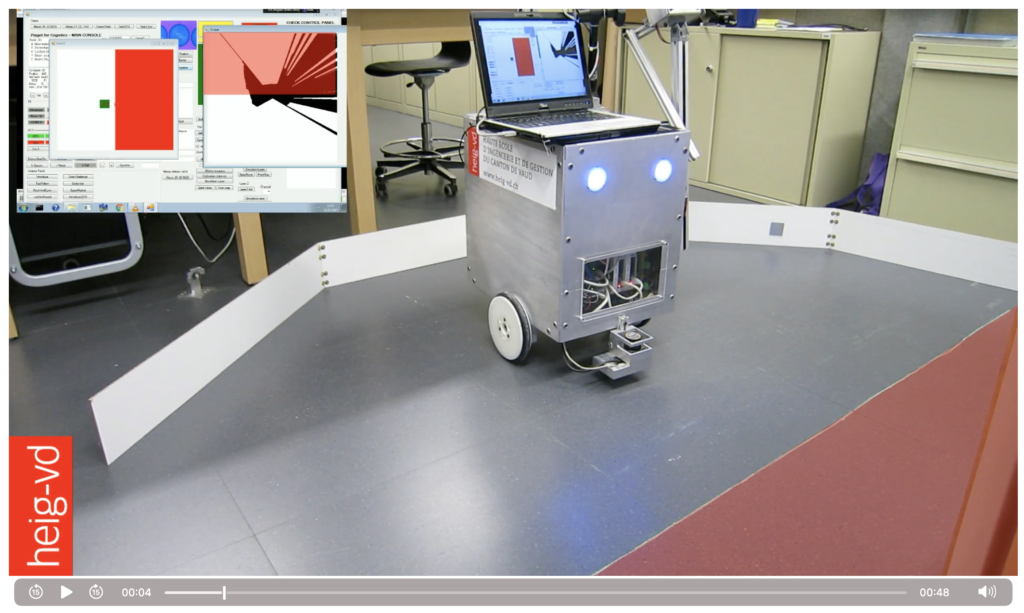

Autonomous Robot Navigates in Real and Imaginary Worlds

The video presents a robot that demonstrates its ability to navigate, on the one hand, under a combination of constraints related to both the real world and an imaginary world and, on the other hand, according to a pattern typical of emotions [2.1].

The robot in action is RH-Y, one of our robots with a high degree of cognitive ability and effectiveness in the real world. (In addition, RH-Y can also work cooperatively with humans in many applications; to be clear, however, the latter ability is not used in this video, as autonomy is complete in this demonstration.)

Here, the robot follows a random path, avoiding both collisions with physical objects (e.g. walls) and access to “forbidden” areas (defined in a digital map, and represented in red on the figure).

The context of this demo corresponds to our MCS theory of cognition [2.2], and in particular to the notions of value, value estimation, and emotion. Here, the walls (real objects) and the forbidden zone (defined as an imaginary object) have a negative value, and are interpreted as threats, while the free zones appear as opportunities.

Natural emotions, and similarly their equivalent in robot behavior, correspond to strategic and possibly sudden changes, typically resulting from changes in surrounding threats and opportunities; this implies a continuous evaluation of the circumstances, and a certain capacity to react accordingly [2.3]. In the video, the robot illustrates these principles: on the one hand, it pivots to avoid obstacles, and on the other hand, it moves forward when it has the opportunity.
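The behavior described above (move forward when there is an opportunity, pivot when a threat appears) can be sketched on a small grid. This is only an illustrative toy model under stated assumptions, not the software running on RH-Y: the grid, the cell symbols, the value function, and all function names are hypothetical, and randomness is limited here to the choice of pivot direction. Walls (`#`) stand for real obstacles, and `X` cells stand for the forbidden zone defined only in the map.

```python
import random

# Hypothetical map: '#' = real wall, 'X' = imaginary forbidden zone, '.' = free.
WORLD = [
    "#########",
    "#.......#",
    "#..XXX..#",
    "#..XXX..#",
    "#.......#",
    "#########",
]
HEADINGS = [(-1, 0), (0, 1), (1, 0), (0, -1)]  # north, east, south, west


def value_of(cell: str) -> int:
    """Negative value for threats (real or imaginary), positive for free space."""
    return -1 if cell in "#X" else 1


def cell_at(world, row, col) -> str:
    """Return the cell symbol; anything outside the map counts as a wall."""
    if 0 <= row < len(world) and 0 <= col < len(world[0]):
        return world[row][col]
    return "#"


def step(world, pose, rng):
    """One decision cycle: evaluate the cell ahead, then pivot or advance."""
    row, col, heading = pose
    d_row, d_col = HEADINGS[heading]
    ahead = cell_at(world, row + d_row, col + d_col)
    if value_of(ahead) < 0:
        # Threat ahead: change strategy and pivot by a quarter turn.
        return (row, col, (heading + rng.choice((-1, 1))) % 4)
    # Opportunity ahead: move forward.
    return (row + d_row, col + d_col, heading)


def wander(world, start, steps, seed=0):
    """Run the decision cycle repeatedly and return the list of poses."""
    rng = random.Random(seed)
    pose = start
    path = [pose]
    for _ in range(steps):
        pose = step(world, pose, rng)
        path.append(pose)
    return path


path = wander(WORLD, start=(1, 1, 1), steps=200)
print(len({(r, c) for r, c, _ in path}), "distinct cells visited")
```

In this sketch, real and imaginary threats are deliberately handled by the same value test: the robot does not need to know whether an obstacle is physical or only defined in the map, it only needs a continuous estimate of the value of what lies ahead.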

Fig. 2 Robotics and Natural Emotions. Our RH-Y robot moves forward when it has the opportunity to do so, and pivots to avoid the threats it encounters: physical or virtual obstacles (real white mini-walls, or imaginary forbidden zones, illustrated in red on this picture) [2.1]

References for video 2

[2.1] Rishabh Madan, Pierre-François Gauthey, and Jean-Daniel Dessimoz, “Free navigation using LIDAR, virtual barriers and forbidden regions”, 48s video, HESSO.HEIG-VD, Yverdon-les-Bains, Switzerland, ca. 30 Mb, made on 2017.07.19, and in particular shown at the 1st International Workshop on Cognition and Artificial Intelligence for Human-Centred Design 2017, co-located with 26th International Joint Conferences on Artificial Intelligence, IJCAI 2017, Melbourne, Australia, August 19, 2017. To download the video (mp4, ca. 30Mb), click here; to download the associated presentation (ppt, ca. 40Mb), click here; to download the presentation in pdf format (pdf, ca. 4Mb), click here. The paper can be freely downloaded from CEUR worksite (paper #8 of Proceedings: click here) .

[2.2] This reference, related to the MCS Theory of Cognition, is identical to reference [1.3].

[2.3] J.-D. Dessimoz, “Natural Emotions as Evidence of Continuous Assessment of Values, Threats and Opportunities in Humans, and Implementation of These Processes in Robots and Other Machines”, Proceedings of the 1st International Workshop on Cognition and Artificial Intelligence for Human-Centred Design 2017, co-located with 26th International Joint Conferences on Artificial Intelligence, IJCAI 2017, Melbourne, Australia, August 19, 2017. Edited by Mehul Bhatt and Antonio Lieto, pp. 47-55. To download the presentation (ppt, ca. 40Mb), click here; to download the presentation in pdf format (pdf, ca. 4Mb), click here. The paper can be freely downloaded from CEUR worksite (paper #8 of Proceedings: click here)

3 – Industrial Robotics and Classical AI (2018)

Yumi plays Connect 4 in Real World – Video

The video “Yumi plays Connect 4 in the Real World” [3.1] presents an example of a real-world application.

Superficially, the theme seems to be mainly typical AI, in the popular sense, since it is about a machine behaving like a human in a widely played board game, “Connect 4”, which involves logic skills in a similar way to chess or Go.

More fundamentally, this application involves automated cognitive processes, i.e. cognitics (including intelligence), and has a double merit: it avoids the drift towards a mysterious “general AI” postulated by some, and it goes beyond the stage of the algorithm and the confinement to cognition alone, integrating into the real world with consideration for values and the collective. Moreover, this integration is done according to industrial standards, using both hardware and software tools corresponding to best practices.

The video shows the ABB Yumi industrial robot, configured and programmed in our own way, as it observes the board, thinks, places its pieces, and finally puts the game away once the game is over.

The Laboratory of Robotics and Automation (LaRA) has acquired and installed various resources, and has developed a supervision layer to coordinate the whole, including control of robot movements and visual analysis of scenes, within the framework of typical applications of the industrial context. In this particular case, the robot is a humanoid collaborative robot.

This application brings a concrete complement, in terms of experimentation and validation, to our MCS theory of cognition, notably presented in a book [3.2]. A brief description of the video is also available as a downloadable poster [3.3].

Fig. 3 Industrial Robotics and Classical AI. Extracts of the proposed “Yumi plays Connect 4 in Real World” video, machine versus human. (In the background, 8 of our autonomous mobile robots can be seen above the cabinets; they are all veterans of international robotic competitions in the “Eurobot” framework) [3.1]

References for video 3

[3.1] Pierre-François Gauthey and Jean-Daniel Dessimoz, “Yumi Connect 4 – Puissance 4 Video”, 4.07 min., ca. 39 Mb, 2018.04.03. Shown live at “Portes Ouvertes”, HESSO.HEIG-VD, Yverdon-les-Bains, 16 March 2018. On YouTube: www.youtube.com/watch?v=Qu8paGmnVok , last access 20 Oct. 2021. Or directly on our servers: click here to download HEIG-VD video (avi, ca. 39 Mb).

[3.2] This reference, related to the MCS Theory of Cognition, is identical to reference [1.3].

[3.3] Jean-Daniel Dessimoz and Pierre-François Gauthey, « Yumi Plays Connect 4 in Real World », Example of application in real world; ABB Yumi robot watches, thinks, places its pawns and finally, tidies up, when game is over. Poster, 21st Swiss Robotics Days, org. by Robot-CH, the Swiss association for promotion of robotics, at Y-Parc, Yverdon-les-Bains, 13-14 April 2018; click here to download the poster in pdf format (ca. 6.6Mb); click here to download the corresponding HEIG-VD video (avi, ca. 39 Mb).

4 – Robotics and Cooperation with Humans (2015)

Signs – Gesture-controlled assistive robot

The proposed video is based on the sequence dealing with our RH-Y assistance robot, here controlled by gestures [4.1], which was broadcast at the end of a program of the “Signes” series of the French-speaking Swiss Television (RTS).

The sequence that concerns us is an example of a real-world application of an “intelligent” machine or, in more general terms, of a machine with advanced cognitive capacities, quantifiable in cognitive terms [4.2].

At the heart of the program, the director proposes a series of experiments: “We are not just (deaf) guinea pigs, do the deaf see better than the people who can hear? To answer this question, our colleagues from “L’œil et la Main” have chosen to take over a vast hangar transformed into a scientific laboratory and to conduct a series of experiments.”

To evaluate the advantages and limitations of gestures for communication, also in the case of robots, the context involving hearing impaired people is interesting.

Natural communication between humans includes language in a very important way. A language-based mode of communication has its advantages and limitations, which are discussed in turn, especially in relation to the gestural mode.

- Verbal communication. The great advantage of language is the very abstract modeling of the world that it implies, and its conventional character, which allows communication between members of the same culture or group. The messages are then typically very simple, and compatible in particular with oral coding (it is for example well established that the information flow in an ordinary oral conversation is about 30 bit/s). Moreover, speech is largely omnidirectional, and most obstacles are largely transparent to sound waves.

- Gestural communication. Gestural communication brings the great advantage of a very large information flow compared to verbal communication. For humans, perception then takes place through the visual channel, and for machines, in particular for our RH-Y robot in this video, through a Lidar (fast and precise measurement, in a light plane; acoustic, optoelectronic, or two-dimensional time-of-flight channels are also sometimes used on RH-Y, but in general, for this type of application, they are less reliable or economically less attractive at the current stage). Such a communication capability is especially necessary for efficient guidance when it comes to mobility (e.g. waving, or taking by the hand).

The case of deaf people is interesting: of course, without a speech channel, verbal conversation via sound is in principle not possible. But insofar as these people can compensate with the visual sense, which is very often the case, it becomes almost an advantage because the capacity of the visual channel is much greater than that of the audio channel. And even if one wishes to find the level of abstraction and thus the simplicity of language, this time via the visual channel, an ad hoc coding can also be defined in visual terms for a given group (sign language). In the latter case, it is then the hearing people who turn out to be “deaf”, as long as they do not learn this gesture language, this culture, as long as they do not join the group.

In this demonstration, the experience gained with the technicians of the television team confirmed once again how easy it is to control a robot by gestures. In just a few minutes, without any further preparation, people can learn to guide the RH-Y robot without touching it (i.e. to start or stop it, to move it forwards or backwards, and to turn it on the spot; on this basis, it can be sufficient for the user to walk in front of the robot, and the latter will follow him/her).

Fig. 4 Robotics and Cooperation with Humans. Our RH-Y robot can be controlled or guided by simple gestures, as illustrated in this sequence broadcast on the RTS, as part of the program “Signes” which has as its theme the hearing impaired [4.1].

References for video 4

[4.1] Monique Aubonney, « Commande gestuelle pour guider le robot d’assistance RH-Y », interview with J.-D. Dessimoz and demonstration at the Laboratory of Robotics and Automation (iAi-LaRA), HESSO.HEIG-VD, Yverdon-les-Bains, 4 Dec. 2015, mp4 video, 5 min., 334 Mb, published in «Signes», Stéphane Brasey, director and producer, program of Radio Télévision Suisse, Geneva, Switzerland, broadcast on 19.12.2015 (from min. 25). Click here to access the program directly. Click here to download the complete program (mp4, 30 min., ca. 300 Mb); accessed 19 Nov. 2021. Click here to download the excerpt corresponding to the demonstration (mp4, 5 min., ca. 350 Mb); accessed 20 Nov. 2021.

[4.2] This reference, related to the MCS Theory of Cognition, is identical to reference [1.3].

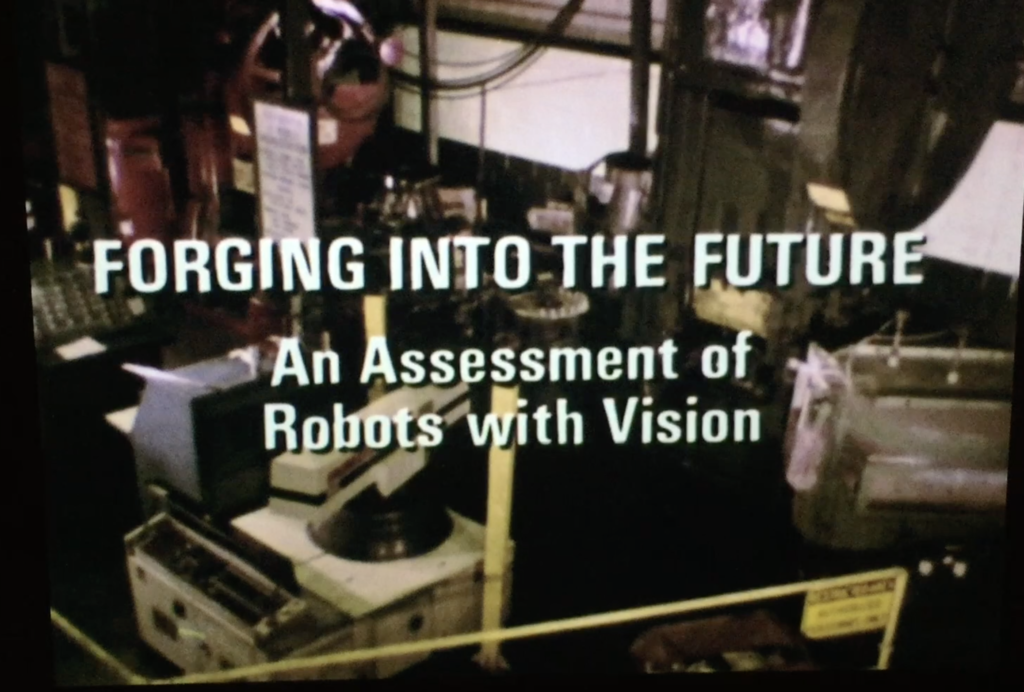

5 – Robotics with Vision for Industrial Application, including Bin Picking (1981)

Robot with Machine-based Vision for Industrial Application in Forging Factory

The proposed video is based on a 16 mm film produced by the American company General Electric (GE) and documenting a study commissioned at the University of Rhode Island (URI) [5.1].

The Robotics Research Group (RRG) of URI was the first institute funded by the US National Science Foundation (NSF) in the field of robotics, and the advances in this field opened the prospect of concrete and positive benefits for society.

Accordingly, with a view to replacing human workers with machines, GE determined that the industrial task most representative of the priority technical challenges then faced in their workshops was the production of turbine blades for jet engines: starting with a metal cylinder, the process includes heating, pressing, and cutting operations, with the necessary handling between the related specialized machine tools.

The video demonstrates the feasibility of carrying out this task with our robot, which is equipped with vision and advanced gripping tools (a sort of “hands”).

In scientific and technical terms, a particular challenge in this application is posed by the buffer stocks between machines, which are simply kept in bulk (in bins); this requires picking up parts in largely random positions and, what is more, with variable shapes (in our case, at the discharge of some presses). Relevant elements to address this type of challenge have been the subject of multiple scientific publications (notably [5.2 and 5.3]).

For a more comprehensive understanding of applications of this type and more, it is useful to refer to our MCS theory of cognition [5.4].

Fig. 5 Robotics with Vision for Industrial Application, including Bin Picking. In a General Electric film, our Mark-1 robot developed at the Robot Research Group at the University of Rhode Island (USA) demonstrates the feasibility of automating demanding manufacturing processes, including the handling of parts piled in bulk in bins [5.1].

References for video 5

[5.1] “FORGING INTO THE FUTURE, An Assessment of Robots with Vision” , video of General Electric, for a Study done at the University of Rhode Island, by the Robot Research Group (J. Birk, R. Kelley, J.-D. Dessimoz, H. Martins, R. Tella, et al. ) , Spring 1981, USA, 7.5 min. ; initially 16mm film, then digitized; click here to download the video (mp4, 723 Mb); last accessed 21 Nov. 2021.

[5.2] R. Kelley, J. Birk, J.-D. Dessimoz, H. Martins and R. Tella, « Forging: Feasable Robotics Techniques », 12th Int. Symp. on Ind. Robots, Paris, June 9-11, 1982, pp59-66.

[5.3] J.-D. Dessimoz, J. Birk, R. Kelley, H. Martins and Chih-Lin I, « Matched Filters for Bin Picking », IEEE Trans. on Pattern Analysis and Machine Intelligence, vol PAMI-6, No 6, New-York, Nov 1984, pp.686-697

[5.4] This reference, related to the MCS Theory of Cognition, is identical to reference [1.3].

6 – Robotics with Vision for Industrial Application, including Handling of Overlapping Parts (1979)

Robot with Machine-based Vision for Picking Industrial Workpieces

The two proposed videos are based on a 16 mm film documenting a study supported by a federal research fund (Commission pour l’Encouragement de la Recherche Scientifique – CERS) at the Signal Processing Laboratory (LTS), belonging to the Swiss Federal Institute of Technology in Lausanne (EPFL), Switzerland [6.1-3].

The research in the field of visual signals was aimed at a good understanding of the cognitive problems linked to computer vision (artificial vision) and, in the long term, at concrete and positive repercussions for society, in particular for robot control, with a view to the automation of industrial production processes.

The filmed demonstrations validate the approach, which consists of detecting contours in images, processing them in order to characterize them in terms of parameterized curvature, and then comparing them by correlation methods to previously learned references; this makes it possible to recognize and localize perceived objects, even when they are only partially visible. The first video documents the more favorable cases, where the parts do not overlap, and therefore a very good contrast is possible between parts and substrate [6.2], while the second documents the case of overlapping parts, where the contrasts are necessarily weaker, and the parts are very often only partially visible [6.3].
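The principle of comparing curvature descriptions by correlation can be illustrated with a deliberately simplified sketch. It is not the method of the original study, whose details are given in [6.4] and [6.5]: here the contour is assumed to be complete, closed, and sampled at uniform steps, the turning angle at each sample stands in for the parameterized curvature, and a plain circular correlation finds where the observed contour starts along the learned reference. All function names and the L-shaped test part are hypothetical. Partially visible contours, as in the second video, would require correlating contour segments, which this sketch does not attempt.

```python
import math


def trace(vertices):
    """Sample an axis-aligned polygon with integer vertices in unit steps."""
    points = []
    n = len(vertices)
    for i in range(n):
        (x0, y0), (x1, y1) = vertices[i], vertices[(i + 1) % n]
        steps = abs(x1 - x0) + abs(y1 - y0)
        for t in range(steps):
            points.append((x0 + (x1 - x0) * t / steps, y0 + (y1 - y0) * t / steps))
    return points


def curvature_signature(points):
    """Turning angle at each sample of a closed contour (rotation invariant)."""
    n = len(points)
    signature = []
    for i in range(n):
        (x0, y0), (x1, y1), (x2, y2) = points[i - 1], points[i], points[(i + 1) % n]
        ax, ay, bx, by = x1 - x0, y1 - y0, x2 - x1, y2 - y1
        signature.append(math.atan2(ax * by - ay * bx, ax * bx + ay * by))
    return signature


def best_shift(reference, observed):
    """Circular correlation: the shift s such that observed[i] best matches
    reference[(i + s) % n]."""
    n = len(reference)

    def score(s):
        return sum(reference[(i + s) % n] * observed[i] for i in range(n))

    return max(range(n), key=score)


def estimate_rotation(reference_pts, observed_pts, shift):
    """Angle between matched contour steps, once the start offset is known."""
    n = len(reference_pts)
    (rx0, ry0), (rx1, ry1) = reference_pts[shift], reference_pts[(shift + 1) % n]
    (ox0, oy0), (ox1, oy1) = observed_pts[0], observed_pts[1]
    rx, ry, ox, oy = rx1 - rx0, ry1 - ry0, ox1 - ox0, oy1 - oy0
    return math.atan2(rx * oy - ry * ox, rx * ox + ry * oy)


# Learned reference: a hypothetical L-shaped part.
reference_pts = trace([(0, 0), (4, 0), (4, 1), (1, 1), (1, 3), (0, 3)])

# Observed part: same shape, rotated by 30 degrees, translated, and with the
# contour starting 5 samples later.
theta, offset = math.radians(30), 5
c, s = math.cos(theta), math.sin(theta)
moved = [(x * c - y * s + 2.0, x * s + y * c - 1.0) for x, y in reference_pts]
observed_pts = moved[offset:] + moved[:offset]

shift = best_shift(curvature_signature(reference_pts), curvature_signature(observed_pts))
rotation = estimate_rotation(reference_pts, observed_pts, shift)
print(shift, round(math.degrees(rotation), 3))
```

Because the turning angles do not change under rotation or translation, recognition reduces to a one-dimensional correlation along the contour, and the pose of the part follows from the best-matching offset.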

These research results, including the film, have been published in the scientific community [incl. 6.4 and 6.5].

Fig. 6 Robotics with Vision for Industrial Application, including Handling of Overlapping Parts. Our videos [6.1] document a computer vision approach to recognize and localize industrial workpieces, with theoretical presentation and validation tests, including a computer, an image digitizer with real-time contour extraction [6.6], as well as a camera and a robot.

References for video 6

[6.1] Jean-Daniel Dessimoz and Eric Gruaz, “Robot with Vision for the Recognition and Localization of Industrial Mechanical Parts”, videos, 1979, Signal Processing Lab (LTS), Swiss Federal Institute of Technology (EPFL), Lausanne, Switzerland; initially 16mm film (as presented at 9th ISIR, Washington), then 8mm, and finally digitized in 3 videos: part 1 [6.2], part 2 [6.3], and integrated version consisting of parts 1 and 2, along with [6.4].

[6.2] Part 1 of [6.1] : Case of non-overlapping workpieces, 6.5 min.: “Recognition and Localization of Multiple Non-overlapping Industrial Mechanical Parts” , video, Jean-Daniel Dessimoz and Eric Gruaz, 1979, Signal Processing Lab (LTS), Swiss Federal Institute of Technology (EPFL), Lausanne, Switzerland; 6.5 min. ; initially 16mm film, then 8mm, and finally digitized; https://roboptics.ch/f/p/LaRA/Publications/1979 ISIR – USA/1979 Robot with Vision – picking non-overlapping workpieces.mp4 last accessed Oct. 20, 2021

[6.3] Part 2 of [6.1]: Case of overlapping workpieces, 6 min.: “Recognition and Localization of Overlapping Industrial Mechanical Parts” , video, Jean-Daniel Dessimoz, 1979, Signal Processing Lab (LTS), Swiss Federal Institute of Technology (EPFL), Lausanne, Switzerland; 6 min. ; initially 16mm film, then 8mm, and finally digitized; 542.2 Mb; https://www.roboptics.ch/f/p/LaRA/Publications/1979%20ISIR%20-%20USA/1979%20Robot%20with%20Vision%20-%20picking%20overlapping%20workpieces.mp4 last accessed Oct. 20, 2021.

[6.4] J.-D. Dessimoz, M. Kunt, G.H. Granlund and J.-M. Zurcher, « Recognition and Handling of Overlapping Parts », Proc. 9th Intern. Symp. on Industrial Robots, Washington DC, USA, March 1979, pp. 357-366; including the following video (part 3 of [6.1]): https://roboptics.ch/f/p/LaRA/Publications/1979 ISIR – USA/1979 Robot Vision – industrial application.mp4 . The paper is available at the following URL: https://roboptics.ch/f/p/LaRA/Publications/1979 ISIR – USA/1979.03.jj Recognition and Handling of Overlapping Parts.pdf last accessed Oct. 20, 2021.

[6.5] J.-D. Dessimoz, « Traitement de Contours en Reconnaissance de Formes Visuelles: Application à la Robotique », Doctoral thesis, Ecole Polytechnique Fédérale de Lausanne, Sept. 1980, 150 pp. Thesis (pdf, 3.9 Mb) and info at EPFL

[6.6] P. Kammenos and J.-M. Zurcher, “Polar encoding based workpiece localisation and recognition and robot handling”, Proc. 8th Intern. Symp. on Industrial Robots, Stuttgart, Germany, May 30-June 2 1978.